Physician User Study on Explainable AI

A usability study to identify physicians’ needs and requirements for explainable AI

Study Title:

A usability study to identify physicians’ needs and requirements of explainable artificial intelligence

Study Description:

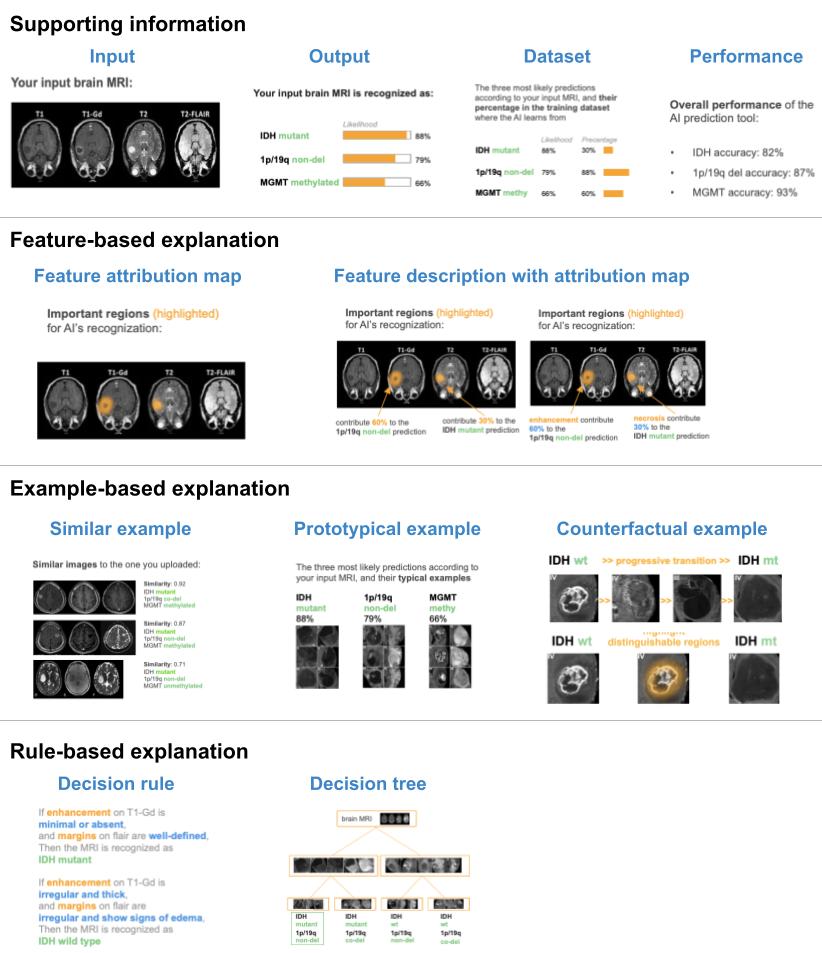

Although artificial intelligence (AI), especially deep learning, has the potential to augment clinicians’ abilities, it has not yet been widely implemented in patient-care settings. One major impediment is the black-box nature of AI: users cannot see how a model arrives at its output.

It is therefore important to design explainable AI that is optimized not only for its performance on the intended clinical task, but also for its interpretability, i.e., its ability to explain its reasoning to users in terms they can understand.

This study aims to understand physicians’ needs and requirements for AI, in order to inform the development of AI systems for clinical decision support.

SFU Ethics number: 2019s0108