publications

2024

- AIIM

Evaluating the clinical utility of artificial intelligence assistance and its explanation on the glioma grading task. Jin, Weina, Fatehi, Mostafa, Guo, Ru, and Hamarneh, Ghassan. Artificial Intelligence in Medicine, 2024.

Weina Jin and Mostafa Fatehi are co-first authors.

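TLDR: A clinical user study with 35 neurosurgeons found that AI predictions improved physicians' glioma grading accuracy, mainly by pulling their decisions toward the AI's, while the added AI explanations brought no significant further benefit in the collaborative doctor-AI setting.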

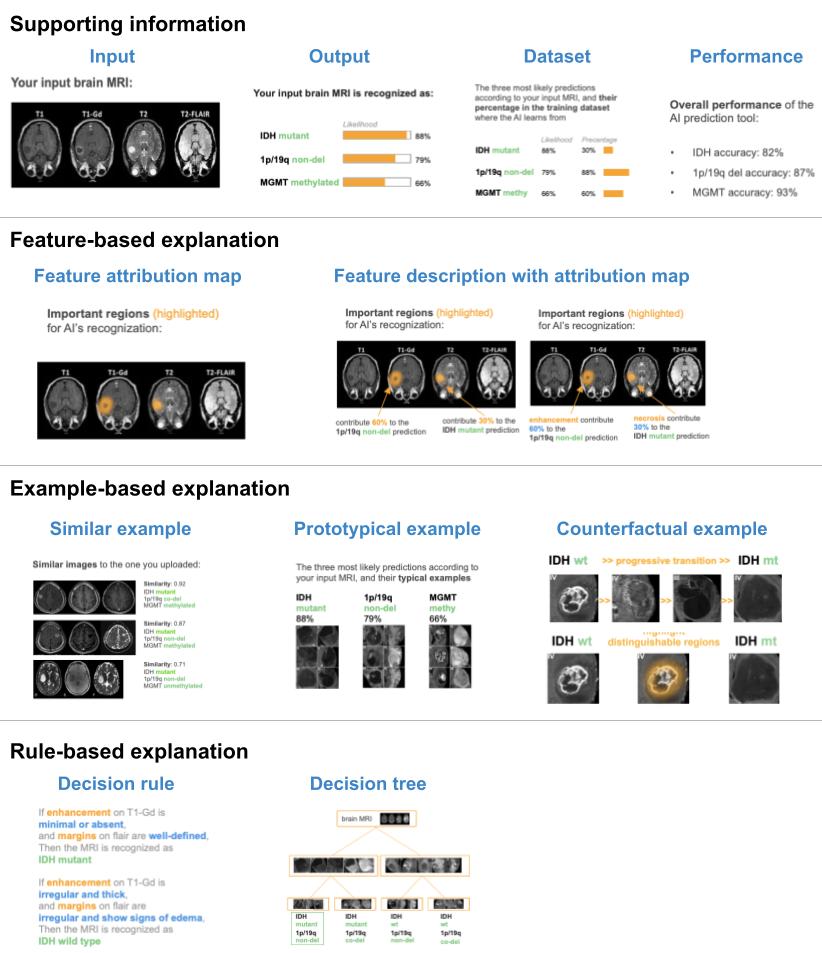

Clinical evaluation evidence and model explainability are key gatekeepers to ensure the safe, accountable, and effective use of artificial intelligence (AI) in clinical settings. We conducted a clinical user-centered evaluation with 35 neurosurgeons to assess the utility of AI assistance and its explanation on the glioma grading task. Each participant read 25 brain MRI scans of patients with gliomas, and gave their judgment on the glioma grading without and with the assistance of AI prediction and explanation. The AI model was trained on the BraTS dataset with 88.0% accuracy. The AI explanation was generated using the explainable AI algorithm of SmoothGrad, which was selected from 16 algorithms based on the criterion of being truthful to the AI decision process. Results showed that compared to the average accuracy of 82.5±8.7% when physicians performed the task alone, physicians’ task performance increased to 87.7±7.3% with statistical significance (p-value = 0.002) when assisted by AI prediction, and remained at almost the same level of 88.5±7.0% (p-value = 0.35) with the additional assistance of AI explanation. Based on quantitative and qualitative results, the observed improvement in physicians’ task performance assisted by AI prediction was mainly because physicians’ decision patterns converged to be similar to AI, as physicians only switched their decisions when disagreeing with AI. The insignificant change in physicians’ performance with the additional assistance of AI explanation was because the AI explanations did not provide explicit reasons, contexts, or descriptions of clinical features to help doctors discern potentially incorrect AI predictions. The evaluation showed the clinical utility of AI to assist physicians on the glioma grading task, and identified the limitations and clinical usage gaps of existing explainable AI techniques for future improvement.
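SmoothGrad, the explanation algorithm selected above, averages the gradient of the model score with respect to the input over several copies of the input perturbed with Gaussian noise, which tends to give less noisy heatmaps than a single gradient. The sketch below is a minimal, hypothetical illustration in plain Python: it assumes a toy model whose gradient is supplied analytically (the study itself explained a DNN trained on BraTS), and the names `smoothgrad`, `grad_fn`, `sigma`, and `n_samples` are illustrative, not taken from the paper's code. Here `sigma` is an absolute noise level; in practice it is often set relative to the input's value range.

```python
import random
from typing import Callable, List

Vector = List[float]


def smoothgrad(grad_fn: Callable[[Vector], Vector], x: Vector,
               sigma: float = 0.15, n_samples: int = 50,
               seed: int = 0) -> Vector:
    """Average the model gradient over n_samples noisy copies of x.

    grad_fn returns d(score)/d(input) at a given input; sigma is the
    standard deviation of the Gaussian noise added to every feature.
    """
    rng = random.Random(seed)
    total = [0.0] * len(x)
    for _ in range(n_samples):
        # Perturb each input feature with independent Gaussian noise.
        noisy = [xi + rng.gauss(0.0, sigma) for xi in x]
        g = grad_fn(noisy)
        total = [t + gi for t, gi in zip(total, g)]
    return [t / n_samples for t in total]


# Hypothetical toy model: score(x) = sum(w_i * x_i**2),
# so d score / d x_i = 2 * w_i * x_i.
WEIGHTS = [1.0, 0.0, -0.5]


def toy_grad(x: Vector) -> Vector:
    return [2.0 * w * xi for w, xi in zip(WEIGHTS, x)]


if __name__ == "__main__":
    heatmap = smoothgrad(toy_grad, [0.8, 0.3, 0.1])
    print([round(v, 2) for v in heatmap])
```

With `sigma=0.0` the function reduces to the plain gradient, and a feature the toy model ignores (weight 0) always receives zero attribution.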

@article{JIN2024102751, title = {Evaluating the clinical utility of artificial intelligence assistance and its explanation on the glioma grading task}, journal = {Artificial Intelligence in Medicine}, volume = {148}, pages = {102751}, year = {2024}, issn = {0933-3657}, doi = {10.1016/j.artmed.2023.102751}, url = {https://www.sciencedirect.com/science/article/pii/S0933365723002658}, author = {Jin, Weina and Fatehi, Mostafa and Guo, Ru and Hamarneh, Ghassan}, keywords = {Artificial intelligence, Neuro-imaging, Neurosurgery, Explainable artificial intelligence, Clinical study, Human-centered artificial intelligence}, video = {https://youtu.be/rhLQQsc8Z8Y}, project = {brain_ai_clinical_study}, }

2023

- MedIA

Guidelines and evaluation of clinical explainable AI in medical image analysis. Jin, Weina, Li, Xiaoxiao, Fatehi, Mostafa, and Hamarneh, Ghassan. Medical Image Analysis, 2023.

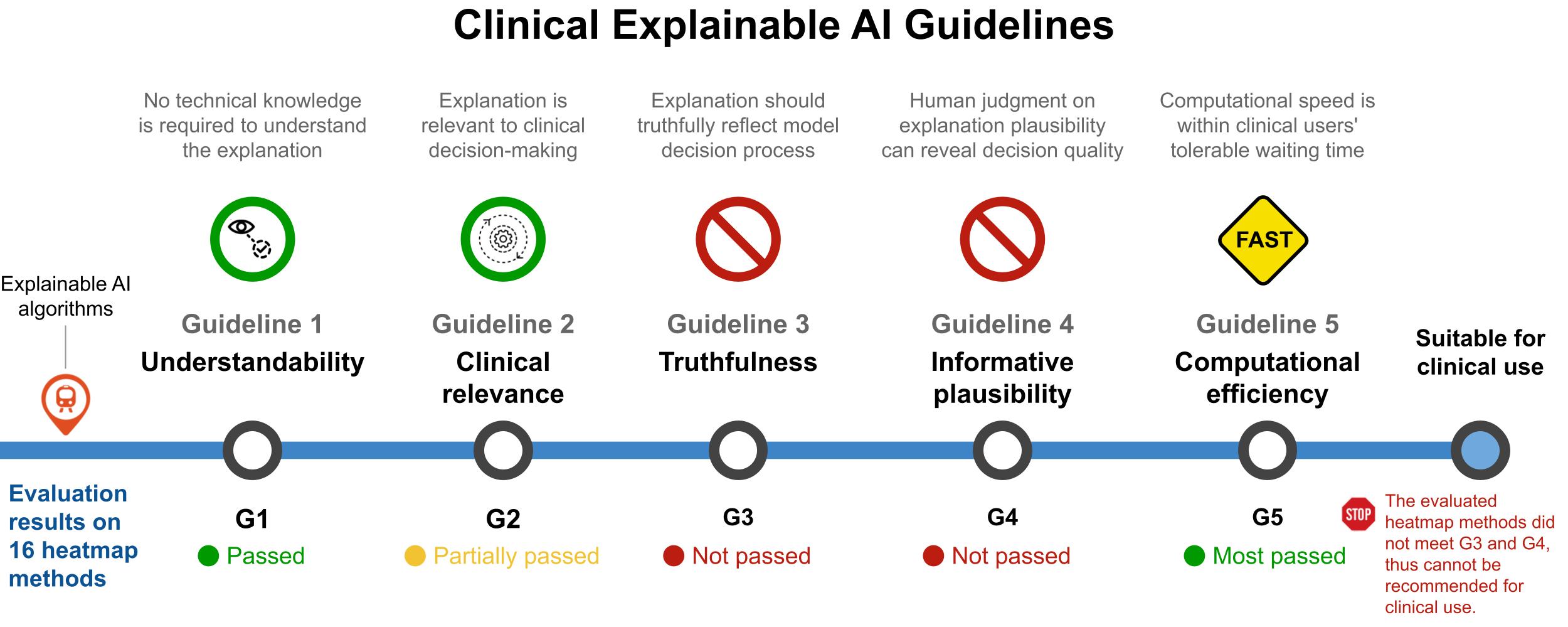

TLDR: The Clinical XAI Guidelines provide criteria that explanations should fulfill in critical decision support.

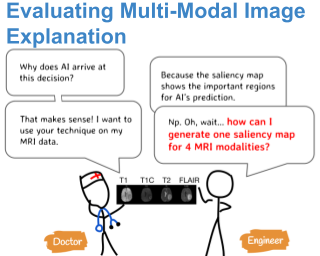

Explainable artificial intelligence (XAI) is essential for enabling clinical users to get informed decision support from AI and comply with evidence-based medical practice. Applying XAI in clinical settings requires proper evaluation criteria to ensure the explanation technique is both technically sound and clinically useful, but specific support is lacking to achieve this goal. To bridge the research gap, we propose the Clinical XAI Guidelines that consist of five criteria a clinical XAI needs to be optimized for. The guidelines recommend choosing an explanation form based on Guideline 1 (G1) Understandability and G2 Clinical relevance. For the chosen explanation form, its specific XAI technique should be optimized for G3 Truthfulness, G4 Informative plausibility, and G5 Computational efficiency. Following the guidelines, we conducted a systematic evaluation on a novel problem of multi-modal medical image explanation with two clinical tasks, and proposed new evaluation metrics accordingly. Sixteen commonly-used heatmap XAI techniques were evaluated and found to be insufficient for clinical use due to their failure in G3 and G4. Our evaluation demonstrated the use of Clinical XAI Guidelines to support the design and evaluation of clinically viable XAI.

@article{JIN2023102684, title = {Guidelines and evaluation of clinical explainable AI in medical image analysis}, journal = {Medical Image Analysis}, volume = {84}, pages = {102684}, year = {2023}, issn = {1361-8415}, doi = {10.1016/j.media.2022.102684}, url = {https://www.sciencedirect.com/science/article/pii/S1361841522003127}, author = {Jin, Weina and Li, Xiaoxiao and Fatehi, Mostafa and Hamarneh, Ghassan}, keywords = {Interpretable machine learning, Medical image analysis, Multi-modal medical image, Explainable AI evaluation}, project = {xai_eval}, }

- MethodsX

Generating post-hoc explanation from deep neural networks for multi-modal medical image analysis tasks. Jin, Weina, Li, Xiaoxiao, Fatehi, Mostafa, and Hamarneh, Ghassan. MethodsX, 2023.

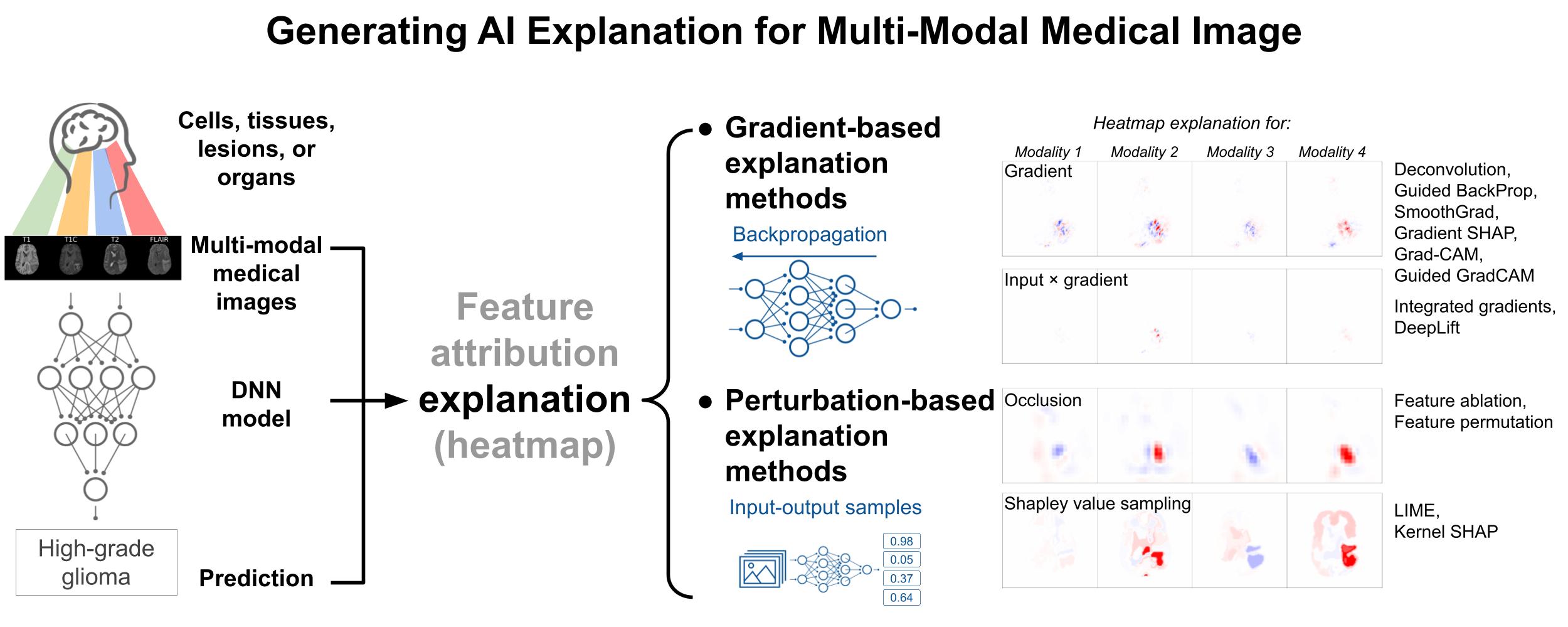

TLDR: Describes methods for generating AI heatmap explanations for multi-modal medical images.

Explaining model decisions from medical image inputs is necessary for deploying deep neural network (DNN) based models as clinical decision assistants. The acquisition of multi-modal medical images is pervasive in practice for supporting the clinical decision-making process. Multi-modal images capture different aspects of the same underlying regions of interest. Explaining DNN decisions on multi-modal medical images is thus a clinically important problem. Our methods adopt commonly-used post-hoc artificial intelligence feature attribution methods to explain DNN decisions on multi-modal medical images, including two categories of gradient- and perturbation-based methods.

- Gradient-based explanation methods, such as Guided BackProp and DeepLift, utilize the gradient signal to estimate the feature importance for model prediction.
- Perturbation-based methods, such as occlusion, LIME, and kernel SHAP, utilize input-output sampling pairs to estimate the feature importance.
- We describe the implementation details on how to make the methods work for multi-modal image input, and make the implementation code available.
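The perturbation-based idea can be illustrated with patch occlusion applied to one modality at a time, so that each modality receives its own heatmap. This is a minimal sketch under stated assumptions, not the released implementation: `model` is a hypothetical function mapping a list of 2-D modality images (nested lists) to a scalar class score, and the patch size and zero baseline are arbitrary choices that a real implementation may handle differently (e.g. 3-D patches, strides, batching).

```python
from typing import Callable, List

Image = List[List[float]]
MultiModal = List[Image]  # one 2-D image per modality, e.g. T1, T1c, T2, FLAIR


def occlusion_per_modality(model: Callable[[MultiModal], float],
                           x: MultiModal, patch: int = 2,
                           baseline: float = 0.0) -> List[Image]:
    """Return one attribution map per modality.

    Each patch of each modality is replaced by `baseline` in turn, and the
    resulting drop in the model score is assigned to every pixel of that patch.
    """
    base_score = model(x)
    maps: List[Image] = []
    for m, img in enumerate(x):
        h, w = len(img), len(img[0])
        heat = [[0.0] * w for _ in range(h)]
        for r0 in range(0, h, patch):
            for c0 in range(0, w, patch):
                # Deep-copy the input and occlude one patch in modality m only.
                occluded = [[row[:] for row in mod] for mod in x]
                for r in range(r0, min(r0 + patch, h)):
                    for c in range(c0, min(c0 + patch, w)):
                        occluded[m][r][c] = baseline
                drop = base_score - model(occluded)
                for r in range(r0, min(r0 + patch, h)):
                    for c in range(c0, min(c0 + patch, w)):
                        heat[r][c] = drop
        maps.append(heat)
    return maps


# Hypothetical toy model that only looks at the top-left 2x2 region of modality 0.
def toy_model(x: MultiModal) -> float:
    return sum(x[0][r][c] for r in range(2) for c in range(2))


if __name__ == "__main__":
    ones = [[1.0] * 4 for _ in range(4)]
    maps = occlusion_per_modality(toy_model, [ones, [row[:] for row in ones]])
    print(maps[0][0][:2], maps[1][0][:2])
```

Because the toy model ignores the second modality, its heatmap is all zeros, while the first modality's heatmap lights up only in the region the model reads: a per-modality map can expose such modality preferences, which a single fused map would hide.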

@article{JIN2023102009, title = {Generating post-hoc explanation from deep neural networks for multi-modal medical image analysis tasks}, journal = {MethodsX}, volume = {10}, pages = {102009}, year = {2023}, issn = {2215-0161}, doi = {10.1016/j.mex.2023.102009}, url = {https://www.sciencedirect.com/science/article/pii/S2215016123000146}, author = {Jin, Weina and Li, Xiaoxiao and Fatehi, Mostafa and Hamarneh, Ghassan}, keywords = {Interpretable machine learning, Explainable artificial intelligence, Medical image analysis, Multi-modal medical image, Post-hoc explanation}, project = {xai_eval}, }

2022

- AAAI

Evaluating Explainable AI on a Multi-Modal Medical Imaging Task: Can Existing Algorithms Fulfill Clinical Requirements? Jin, Weina, Li, Xiaoxiao, and Hamarneh, Ghassan. Proceedings of the AAAI Conference on Artificial Intelligence, Jun 2022.

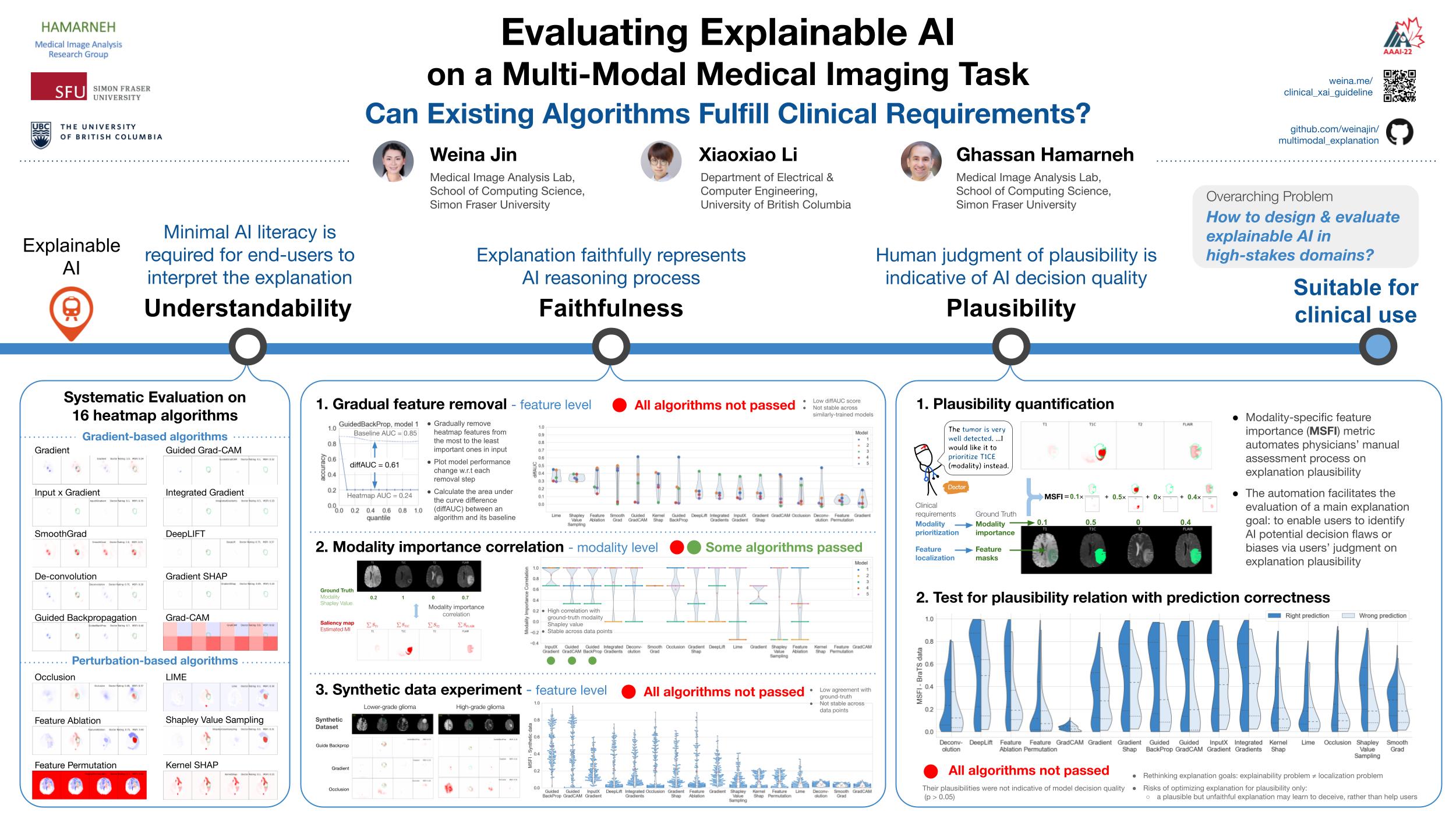

Acceptance rate: 15%

TLDR: Our systematic evaluation showed that the 16 examined heatmap algorithms failed to fulfill clinical requirements to correctly indicate the AI model's decision process or decision quality.

Being able to explain the prediction to clinical end-users is a necessity to leverage the power of artificial intelligence (AI) models for clinical decision support. For medical images, a feature attribution map, or heatmap, is the most common form of explanation that highlights important features for AI models’ predictions. However, it is unknown how well heatmaps perform in explaining decisions on multi-modal medical images, where each image modality or channel visualizes distinct clinical information of the same underlying biomedical phenomenon. Understanding such modality-dependent features is essential for clinical users’ interpretation of AI decisions. To tackle this clinically important but technically ignored problem, we propose the modality-specific feature importance (MSFI) metric. It encodes clinical image and explanation interpretation patterns of modality prioritization and modality-specific feature localization. We conduct a clinical requirement-grounded, systematic evaluation using computational methods and a clinician user study. Results show that the 16 examined heatmap algorithms failed to fulfill clinical requirements to correctly indicate the AI model’s decision process or decision quality. The evaluation and MSFI metric can guide the design and selection of explainable AI algorithms to meet clinical requirements on multi-modal explanation.
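To make the two ingredients of MSFI concrete (modality prioritization and modality-specific feature localization), the sketch below computes a modality-weighted share of heatmap mass that falls inside ground-truth feature masks. It is written under an assumption about the metric's form: for each modality, the fraction of non-negative heatmap mass inside that modality's annotated mask, weighted by a ground-truth modality importance and normalized by the sum of those weights. This follows our reading of the paper and is labeled `msfi_like` to mark it as hypothetical; how the importance weights are obtained and how heatmaps are normalized may differ in the published definition.

```python
from typing import List, Sequence

Map2D = List[List[float]]


def msfi_like(saliency: Sequence[Map2D], masks: Sequence[Map2D],
              importance: Sequence[float]) -> float:
    """Hypothetical MSFI-style score in [0, 1].

    saliency[m]   : heatmap for modality m (negative values are clipped to 0)
    masks[m]      : binary mask for modality m (1 = clinically important region)
    importance[m] : non-negative ground-truth importance weight of modality m
    """
    weight_total = sum(importance)
    if weight_total <= 0:
        raise ValueError("importance weights must have a positive sum")
    score = 0.0
    for s_map, mask, i_m in zip(saliency, masks, importance):
        # Keep only positive attribution, i.e. what the heatmap highlights.
        pos = [[max(v, 0.0) for v in row] for row in s_map]
        mass = sum(sum(row) for row in pos)
        if mass == 0.0:
            continue  # an empty heatmap localizes nothing for this modality
        inside = sum(v * keep for p_row, m_row in zip(pos, mask)
                     for v, keep in zip(p_row, m_row))
        score += i_m * inside / mass
    return score / weight_total


if __name__ == "__main__":
    heatmaps = [[[1.0, 0.0], [0.0, 0.0]],   # modality A: all mass on the lesion
                [[1.0, 1.0], [0.0, 0.0]]]   # modality B: half the mass off-lesion
    lesion = [[1, 0], [0, 0]]
    print(msfi_like(heatmaps, [lesion, lesion], [1.0, 1.0]))  # 0.75
```

Setting modality B's weight to zero makes the score depend only on modality A, which is how the weighting encodes modality prioritization: localization errors on unimportant modalities cost little.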

@article{Jin_Li_Hamarneh_2022, title = {Evaluating Explainable AI on a Multi-Modal Medical Imaging Task: Can Existing Algorithms Fulfill Clinical Requirements?}, volume = {36}, url = {https://ojs.aaai.org/index.php/AAAI/article/view/21452}, doi = {10.1609/aaai.v36i11.21452}, number = {11}, journal = {Proceedings of the AAAI Conference on Artificial Intelligence}, author = {Jin, Weina and Li, Xiaoxiao and Hamarneh, Ghassan}, year = {2022}, month = jun, pages = {11945-11953}, project = {xai_eval_aaai}, }

What Explanations Do Doctors Require From Artificial Intelligence? Jin, Weina, and Hamarneh, Ghassan. Manuscript in preparation, 2022.

@article{jin2022doctorUserStudy, author = {Jin, Weina and Hamarneh, Ghassan}, journal = {Manuscript in preparation}, title = {What Explanations Do Doctors Require From Artificial Intelligence?}, year = {2022}, project = {jin2022doctorUserStudy}, }

2021

- ICML-w

One Map Does Not Fit All: Evaluating Saliency Map Explanation on Multi-Modal Medical Images. Jin, Weina, Li, Xiaoxiao, and Hamarneh, Ghassan. ICML 2021 Workshop on Interpretable Machine Learning in Healthcare, 2021.

Spotlight paper (top 10%), oral presentation

TLDR: The precursor of the AAAI 2022 paper “Evaluating Explainable AI on a Multi-Modal Medical Imaging Task: Can Existing Algorithms Fulfill Clinical Requirements?”

Being able to explain the prediction to clinical end-users is a necessity to leverage the power of AI models for clinical decision support. For medical images, saliency maps are the most common form of explanation. The maps highlight the features that are important for an AI model’s prediction. Although many saliency map methods have been proposed, it is unknown how well they perform in explaining decisions on multi-modal medical images, where each modality/channel carries distinct clinical meanings of the same underlying biomedical phenomenon. Understanding such modality-dependent features is essential for clinical users’ interpretation of AI decisions. To tackle this clinically important but technically ignored problem, we propose the MSFI (Modality-Specific Feature Importance) metric to examine whether saliency maps can highlight modality-specific important features. MSFI encodes the clinical requirements on modality prioritization and modality-specific feature localization. Our evaluations on 16 commonly used saliency map methods, including a clinician user study, show that although most saliency map methods captured modality importance information in general, most of them failed to highlight modality-specific important features consistently and precisely. The evaluation results guide the choices of saliency map methods and provide insights to propose new ones targeting clinical applications.

@article{jin2021one_map_not_fit_all, author = {Jin, Weina and Li, Xiaoxiao and Hamarneh, Ghassan}, title = {One Map Does Not Fit All: Evaluating Saliency Map Explanation on Multi-Modal Medical Images}, journal = {ICML 2021 Workshop on Interpretable Machine Learning in Healthcare}, year = {2021}, eprinttype = {arXiv}, eprint = {2107.05047}, timestamp = {Tue, 20 Jul 2021 15:08:33 +0200}, biburl = {https://dblp.org/rec/journals/corr/abs-2107-05047.bib}, bibsource = {dblp computer science bibliography, https://dblp.org}, project = {one_map}, }

- arXiv

EUCA: the End-User-Centered Explainable AI Framework. Jin, Weina, Fan, Jianyu, Gromala, Diane, Pasquier, Philippe, and Hamarneh, Ghassan. arXiv preprint, 2021.

TLDR: EUCA provides design suggestions on explanation forms and goals from end-users’ perspective.

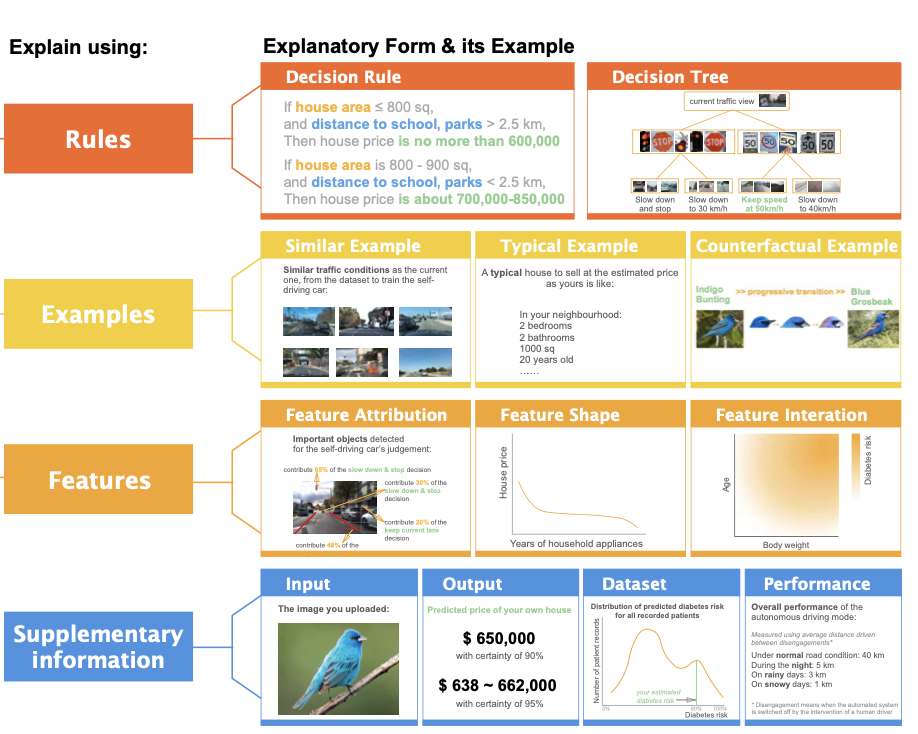

The ability to explain decisions to end-users is a necessity to deploy AI as critical decision support. Yet making AI explainable to non-technical end-users is a relatively ignored and challenging problem. To bridge the gap, we first identify twelve end-user-friendly explanatory forms that do not require technical knowledge to comprehend, including feature-, example-, and rule-based explanations. We then instantiate the explanatory forms as prototyping cards in four AI-assisted critical decision-making tasks, and conduct a user study to co-design low-fidelity prototypes with 32 layperson participants. The results confirm the relevance of using explanatory forms as building blocks of explanations, and identify their properties: pros, cons, applicable explanation goals, and design implications. The explanatory forms, their properties, and prototyping supports (including a suggested prototyping process, design templates and exemplars, and associated algorithms to actualize explanatory forms) constitute the End-User-Centered explainable AI framework EUCA, which is available at http://weinajin.github.io/end-user-xai. It serves as a practical prototyping toolkit for HCI/AI practitioners and researchers to understand user requirements and build end-user-centered explainable AI.

@article{jin2021euca, title = {{EUCA}: the End-User-Centered Explainable {AI} Framework}, author = {Jin, Weina and Fan, Jianyu and Gromala, Diane and Pasquier, Philippe and Hamarneh, Ghassan}, year = {2021}, archiveprefix = {arXiv}, primaryclass = {cs.HC}, project = {euca}, }

2020

- JNE

Artificial Intelligence in Glioma Imaging: Challenges and Advances. Jin, Weina, Fatehi, Mostafa, Abhishek, Kumar, Mallya, Mayur, Toyota, Brian, and Hamarneh, Ghassan. Journal of Neural Engineering, Apr 2020.

TLDR: A review of the challenges and advances in implementing AI in clinical settings (including the interpretable AI challenge) in the neuro-oncology domain.

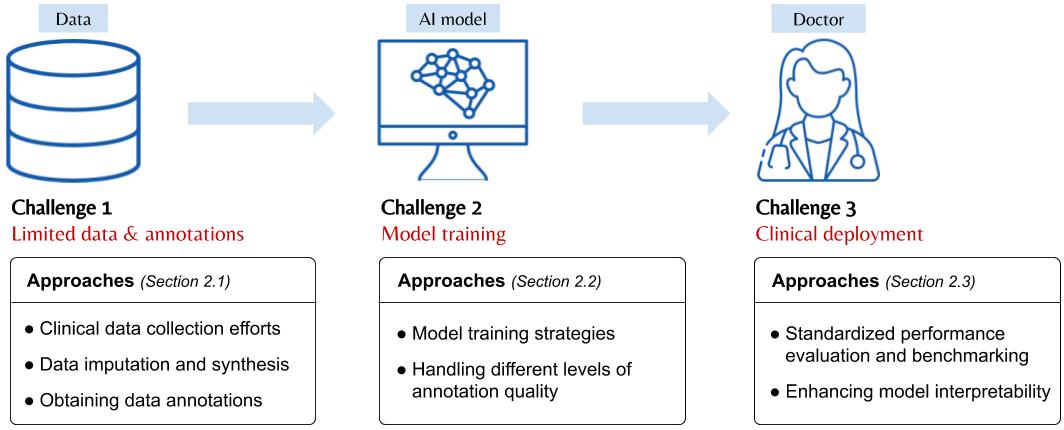

Primary brain tumors including gliomas continue to pose significant management challenges to clinicians. While the presentation, the pathology, and the clinical course of these lesions are variable, the initial investigations are usually similar. Patients who are suspected to have a brain tumor will be assessed with computed tomography (CT) and magnetic resonance imaging (MRI). The imaging findings are used by neurosurgeons to determine the feasibility of surgical resection and plan such an undertaking. Imaging studies are also an indispensable tool in tracking tumor progression or its response to treatment. As these imaging studies are non-invasive, relatively cheap and accessible to patients, there have been many efforts over the past two decades to increase the amount of clinically-relevant information that can be extracted from brain imaging. Most recently, artificial intelligence (AI) techniques have been employed to segment and characterize brain tumors, as well as to detect progression or treatment-response. However, the clinical utility of such endeavours remains limited due to challenges in data collection and annotation, model training, and the reliability of AI-generated information. We provide a review of recent advances in addressing the above challenges. First, to overcome the challenge of data paucity, different image imputation and synthesis techniques along with annotation collection efforts are summarized. Next, various training strategies are presented to meet multiple desiderata, such as model performance, generalization ability, data privacy protection, and learning with sparse annotations. Finally, standardized performance evaluation and model interpretability methods are reviewed. We believe that these technical approaches will facilitate the development of a fully-functional AI tool in the clinical care of patients with gliomas.

@article{Jin_2020_review_brain_ai, author = {Jin, Weina and Fatehi, Mostafa and Abhishek, Kumar and Mallya, Mayur and Toyota, Brian and Hamarneh, Ghassan}, doi = {10.1088/1741-2552/ab8131}, journal = {Journal of Neural Engineering}, month = apr, number = {2}, pages = {021002}, publisher = {{IOP} Publishing}, title = {{Artificial Intelligence in Glioma Imaging: Challenges and Advances}}, volume = {17}, year = {2020}, }

2019

- IEEE VIS poster

Bridging AI Developers and End Users: an End-User-Centred Explainable AI Taxonomy and Visual Vocabularies. Jin, Weina, Carpendale, Sheelagh, Hamarneh, Ghassan, and Gromala, Diane. In IEEE VIS 2019 Conference Poster Abstract, 2019.

VIS Best Poster Presentation Award

TLDR: We conducted a literature review and summarized end-user-friendly explanation forms as visual vocabularies. This work is the precursor of the EUCA framework.

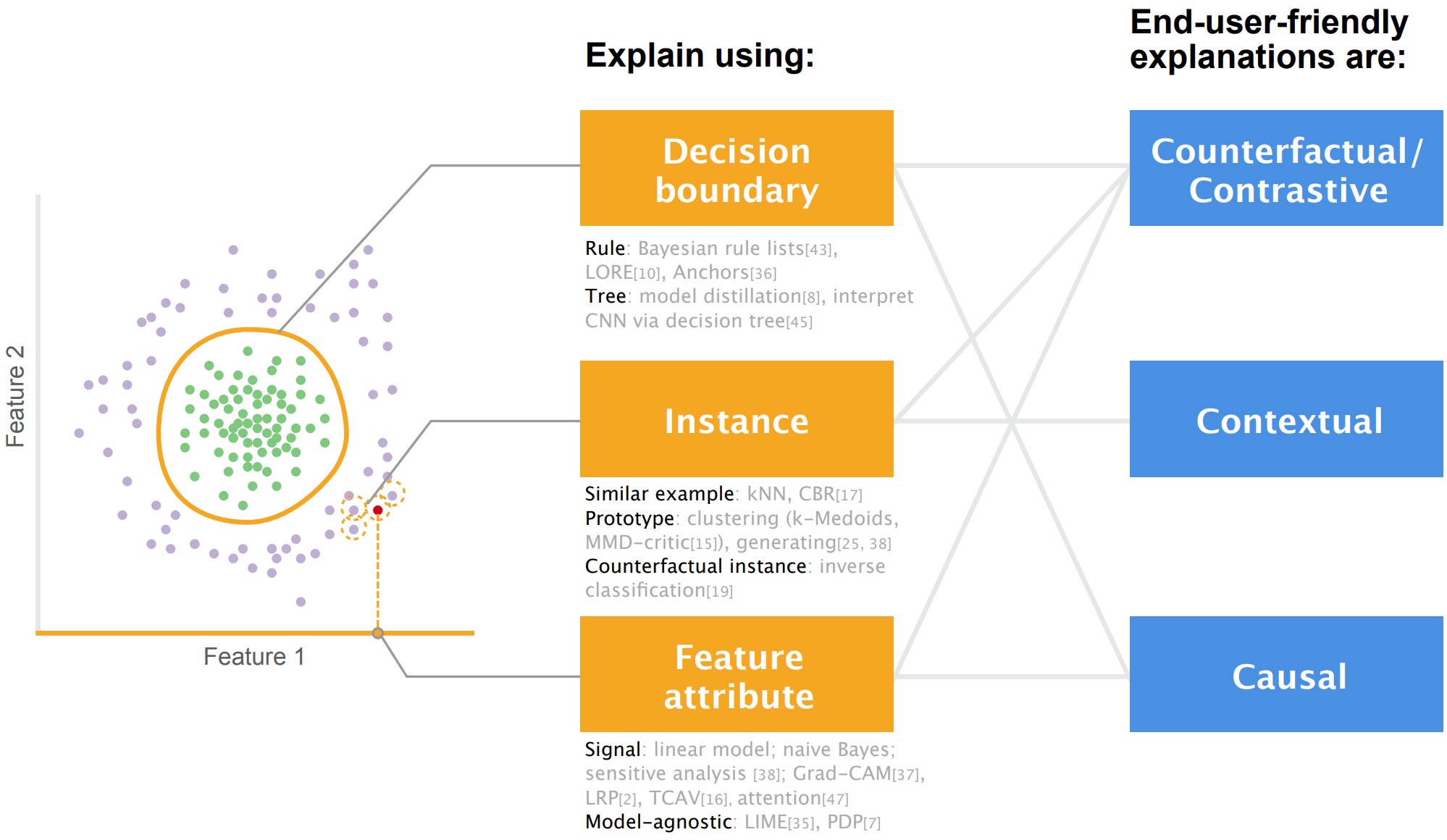

Researchers in the re-emerging field of explainable/interpretable artificial intelligence (XAI) have not paid enough attention to the end users of AI, who may be laypersons or domain experts such as doctors, drivers, and judges. We took an end-user-centric lens and conducted a literature review of 59 technique papers on XAI algorithms and/or visualizations. We grouped the existing explanatory forms in the literature into the end-user-friendly XAI taxonomy. It consists of three forms that explain AI’s decisions: feature attribute, instance, and decision rules/trees. We also analyzed the visual representations for each explanatory form, and summarized them as the XAI visual vocabularies. Our work is a synergy of XAI algorithm, visualization, and user-centred design. It provides a practical toolkit for AI developers to define the explanation problem from a user-centred perspective, and expand the visualization space of explanations to develop more end-user-friendly XAI systems.

@inproceedings{Jin2019vis, author = {Jin, Weina and Carpendale, Sheelagh and Hamarneh, Ghassan and Gromala, Diane}, keywords = {Explainable AI,Visualization}, booktitle = {IEEE VIS 2019 Conference Poster Abstract}, title = {{Bridging AI Developers and End Users: an End-User-Centred Explainable AI Taxonomy and Visual Vocabularies}}, year = {2019}, project = {euca}, video = {https://youtu.be/b5JnaSG1AYM}, }

2018

- IEEE GEM

Automatic Prediction of Cybersickness for Virtual Reality Games. Jin, Weina, Fan, Jianyu, Gromala, Diane, and Pasquier, Philippe. In 2018 IEEE Games, Entertainment, Media Conference (GEM), 2018.

TLDR: Applying machine learning to predict cybersickness.

Cybersickness, which is also called Virtual Reality (VR) sickness, poses a significant challenge to the VR user experience. Previous work demonstrated the viability of predicting cybersickness for VR 360° videos. Is it possible to automatically predict the level of cybersickness for interactive VR games? In this paper, we present a machine learning approach to automatically predict the level of cybersickness for VR games. First, we proposed a novel ranking-rating (RR) score to measure the ground-truth annotations for cybersickness. We then verified the RR scores by comparing them with the Simulator Sickness Questionnaire (SSQ) scores. Next, we extracted features from heterogeneous data sources including the VR visual input, the head movement, and the individual characteristics. Finally, we built three machine learning models and evaluated their performances: the Convolutional Neural Network (CNN) trained from scratch, the Long Short-Term Memory Recurrent Neural Networks (LSTM-RNN) trained from scratch, and the Support Vector Regression (SVR). The results indicated that the best performance of predicting cybersickness was obtained by the LSTM-RNN, providing a viable solution for automatic cybersickness prediction for interactive VR games.

@inproceedings{jin2018cybersickness, author = {Jin, Weina and Fan, Jianyu and Gromala, Diane and Pasquier, Philippe}, booktitle = {2018 IEEE Games, Entertainment, Media Conference (GEM)}, title = {Automatic Prediction of Cybersickness for Virtual Reality Games}, year = {2018}, volume = {}, number = {}, pages = {1-9}, doi = {10.1109/GEM.2018.8516469}, }

2017

- CSCW poster

A Collaborative Visualization Tool to Support Doctors’ Shared Decision-Making on Antibiotic Prescription. Jin, Weina, Gromala, Diane, Neustaedter, Carman, and Tong, Xin. In Companion of the 2017 ACM Conference on Computer Supported Cooperative Work and Social Computing, 2017.

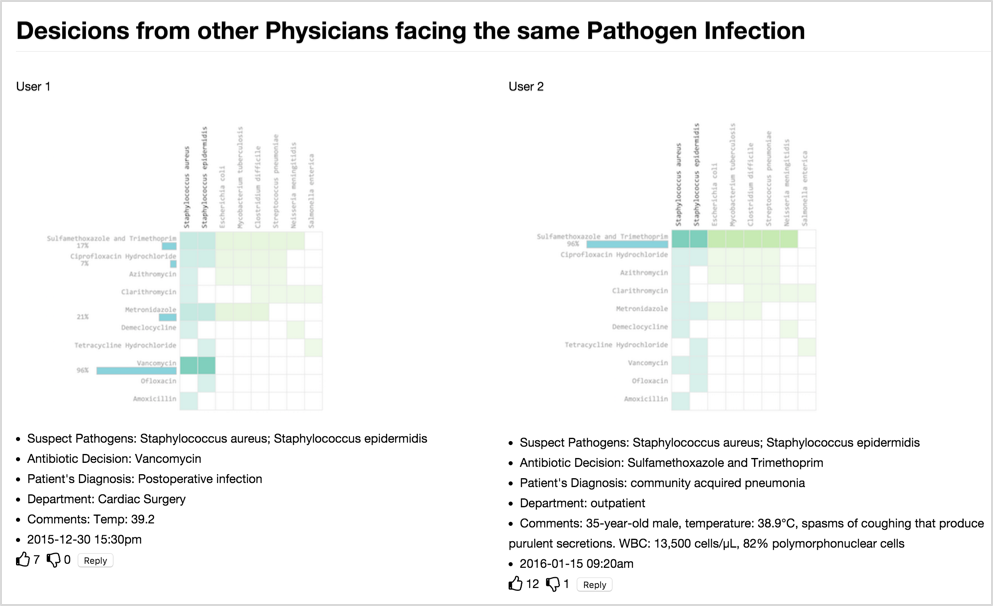

TLDR: A visualization prototype to support asynchronous collaboration among healthcare professionals.

The inappropriate prescription of antibiotics may cause severe medical outcomes such as antibiotic resistance. To prevent such situations and facilitate appropriate antibiotic prescribing, we designed and developed an asynchronous collaborative visual analytics tool. It visualizes the antibiotics’ coverage spectrum, which allows users to choose the most appropriate antibiotics. The asynchronous collaboration around visualization mimics the actual collaboration scenarios in clinical settings, and provides supportive information during physicians’ decision-making process. Our work contributes to the CSCW community by providing a design prototype to support asynchronous collaboration among healthcare professionals, which is crucial but lacking in many of the present clinical decision support systems.

@inproceedings{jin2017cscw, author = {Jin, Weina and Gromala, Diane and Neustaedter, Carman and Tong, Xin}, title = {A Collaborative Visualization Tool to Support Doctors' Shared Decision-Making on Antibiotic Prescription}, year = {2017}, isbn = {9781450346887}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, url = {https://doi.org/10.1145/3022198.3026311}, doi = {10.1145/3022198.3026311}, booktitle = {Companion of the 2017 ACM Conference on Computer Supported Cooperative Work and Social Computing}, pages = {211–214}, numpages = {4}, location = {Portland, Oregon, USA}, series = {CSCW '17 Companion}, }

2016

- CHI poster

AS IF: A Game as an Empathy Tool for Experiencing the Activity Limitations of Chronic Pain Patients. Jin, Weina, Ulas, Servet, and Tong, Xin. In Proceedings of the 2016 CHI Conference Extended Abstracts on Human Factors in Computing Systems, 2016.

CHI Student Game Competition Finalist

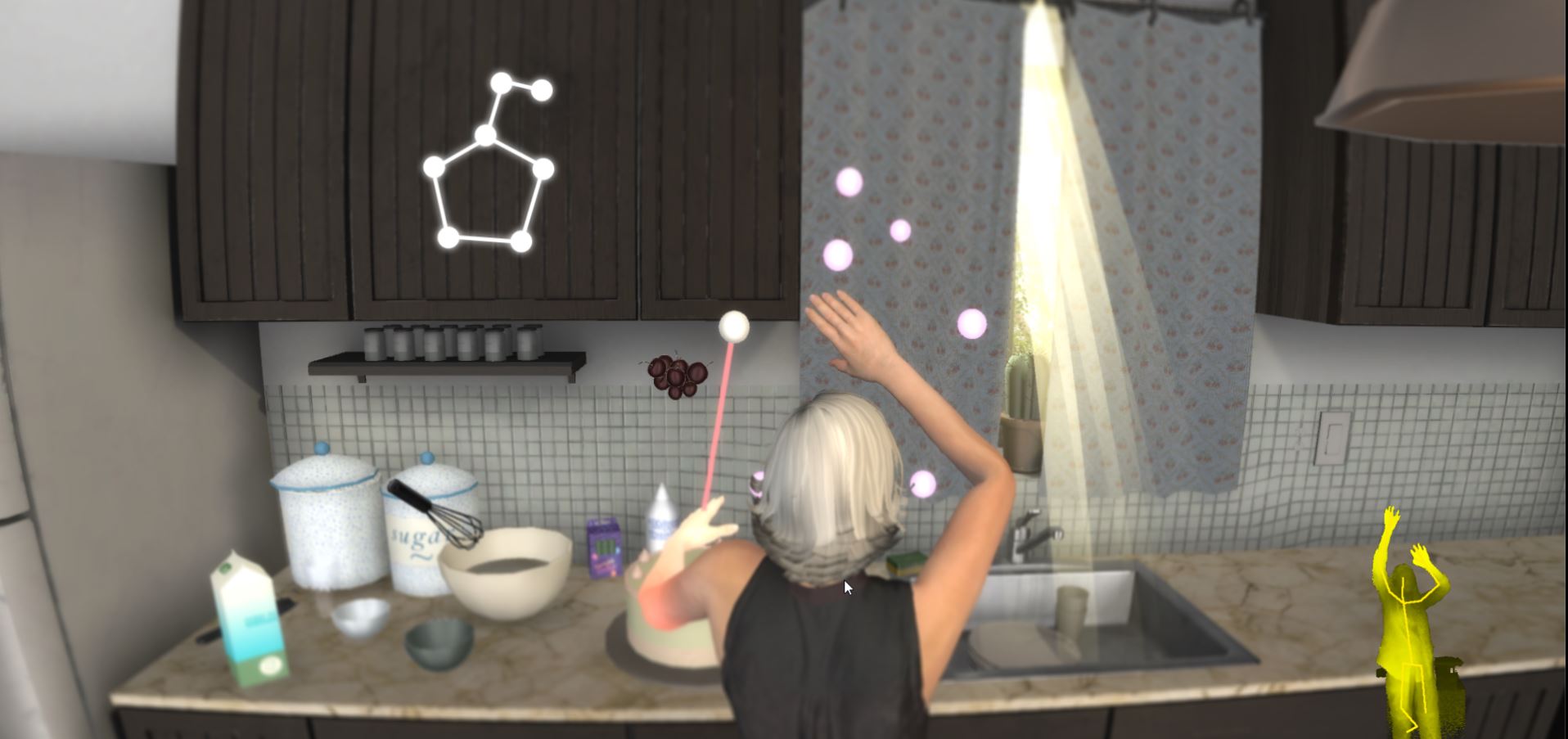

TLDR: The design of a game that fosters empathy for people with chronic pain.

Pain is both a universal and unique experience for its sufferers. Nonetheless, pain is also so invisible and incommunicable that it becomes difficult for the public to understand or even believe in the suffering, especially for the persistent form of pain: Chronic Pain. Therefore, we designed and developed the game AS IF to foster non-patients’ empathy for Chronic Pain sufferers. In this game, players engage in connect-the-dots tasks through whole-body interaction. After they generate the connection with their virtual body, they will experience a certain degree of activity limitation that mimics one of the sufferings of Chronic Pain. In this paper, we introduce the game design that facilitates the enhancement of empathy for Chronic Pain experience, and illustrate how this game acts as a form of communication media that may help to enhance understanding.

@inproceedings{jin2016asif, author = {Jin, Weina and Ulas, Servet and Tong, Xin}, title = {AS IF: A Game as an Empathy Tool for Experiencing the Activity Limitations of Chronic Pain Patients}, year = {2016}, isbn = {9781450340823}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, url = {https://doi.org/10.1145/2851581.2890369}, doi = {10.1145/2851581.2890369}, booktitle = {Proceedings of the 2016 CHI Conference Extended Abstracts on Human Factors in Computing Systems}, pages = {172–175}, numpages = {4}, keywords = {chronic pain experience, gaming for a purpose, mobility, stimulate empathy, body interaction}, location = {San Jose, California, USA}, series = {CHI EA '16}, }

2015

- IEEE GEM poster

Serious game for serious disease: Diminishing stigma of depression via game experience. Jin, Weina, Gromala, Diane, and Tong, Xin. In 2015 IEEE Games Entertainment Media Conference (GEM), 2015.

TLDR: The design of a game that fosters empathy for people with depression.

Stigma is a common and serious problem for patients who suffer from depression and other mental illnesses. We designed a serious game to address this problem. The game enables players to experience and strive to overcome the disempowering aspects of depression during the journey to recovery. Through the game’s interaction, players may gain a better understanding of the relationship between the patient and the disease, which in turn may help to replace the players’ moral model of depression with the disease model, and thereby diminish the stigma of depression.

@inproceedings{jin2015likemind, author = {Jin, Weina and Gromala, Diane and Tong, Xin}, booktitle = {2015 IEEE Games Entertainment Media Conference (GEM)}, title = {Serious game for serious disease: Diminishing stigma of depression via game experience}, year = {2015}, volume = {}, number = {}, pages = {1-2}, doi = {10.1109/GEM.2015.7377256}, project = {likemind}, }